This is an introductory course in the theory of statistics, inference, and machine learning, with an emphasis on theoretical understanding and practical exercises. The course will combine and alternate between theoretical mathematical foundations and practical computational aspects in Python.

The main website of the course will be: https://idephics.github.io/FundamentalLearningEPFL2021/

Content

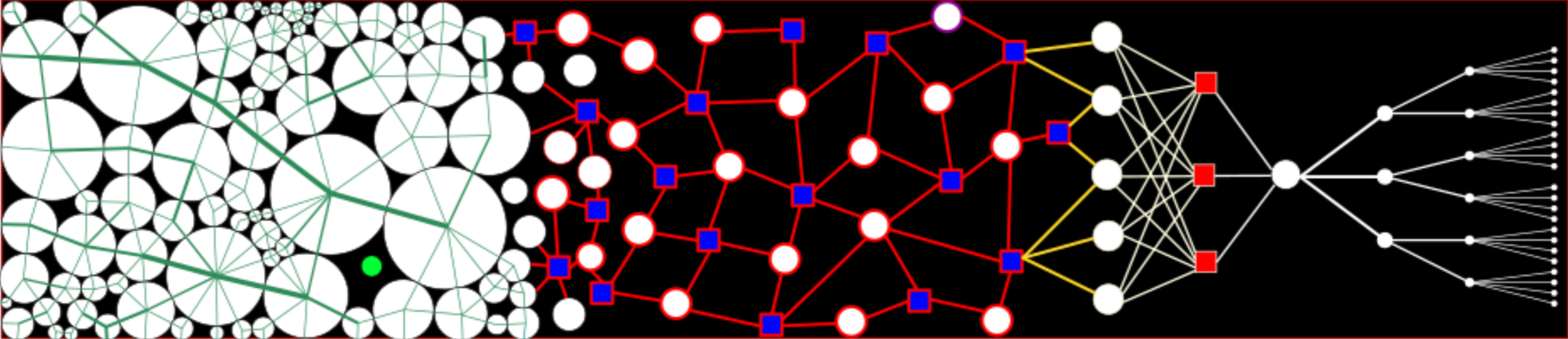

The topics will be chosen from the following basic outline:

- Statistical inference: estimators, bias-variance, consistency, efficiency, maximum likelihood, Fisher information.

- Bayesian inference: priors, a posteriori estimation, Expectation-Maximization.

- Supervised learning: linear regression, ridge, Lasso, sparse problems, high-dimensional data, kernel methods, boosting, bagging, k-NN, support vector machines, logistic regression, optimal margin classifiers.

- Statistical learning theory: VC bounds and uniform convergence, implicit regularisation, double descent.

- Unsupervised learning: mixture models, PCA and kernel PCA, k-means.

- Deep learning: multi-layer nets, convnets, autoencoders, gradient-descent algorithms.

- Basics of generative models and reinforcement learning.

- Professor: Florent Krzakala

- Teacher: Luca Arnaboldi

- Teacher: Luca Pesce

- Teacher: Hugo Jules Tabanelli

- Teacher: Jivan Waber

- Teacher: Xiaohang Yu